WARNING:tensorflow:From /Users/hiro/anaconda3/envs/py367/lib/python3.6/site-packages/tensorflow/python/framework/op_def_library.py:263: colocate_with (from tensorflow.python.framework.ops) is deprecated and will be removed in a future version.

Instructions for updating:

Colocations handled automatically by placer.

WARNING:tensorflow:From /Users/hiro/anaconda3/envs/py367/lib/python3.6/site-packages/keras/backend/tensorflow_backend.py:3445: calling dropout (from tensorflow.python.ops.nn_ops) with keep_prob is deprecated and will be removed in a future version.

Instructions for updating:

Please use `rate` instead of `keep_prob`. Rate should be set to `rate = 1 - keep_prob`.
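The warning above gives the conversion rule `rate = 1 - keep_prob`. A minimal sketch of that arithmetic follows; the helper name `keep_prob_to_rate` is hypothetical and is not part of TensorFlow or Keras. It would only matter if your own code passes `keep_prob` to a dropout call; here the warning is raised from inside the Keras backend.

```python
def keep_prob_to_rate(keep_prob):
    """Convert a keep probability to a drop rate (hypothetical helper).

    keep_prob is the fraction of units kept; rate is the fraction dropped.
    """
    if not 0.0 < keep_prob <= 1.0:
        raise ValueError("keep_prob must be in (0, 1]")
    return 1.0 - keep_prob


# Keeping 80% of the units is the same as dropping 20% of them.
rate = keep_prob_to_rate(0.8)
print(round(rate, 2))
```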

_________________________________________________________________

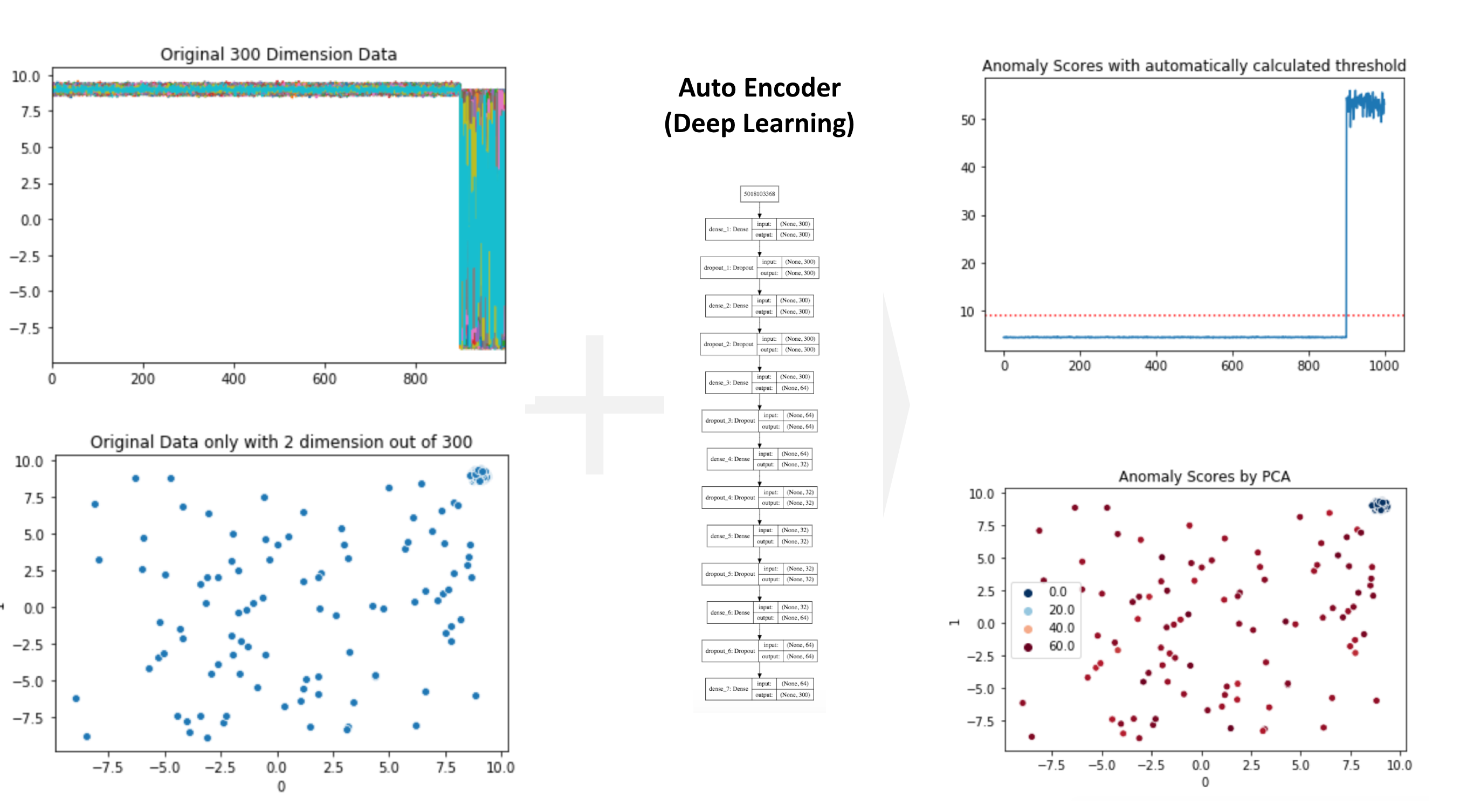

Layer (type) Output Shape Param #

=================================================================

dense_1 (Dense) (None, 300) 90300

_________________________________________________________________

dropout_1 (Dropout) (None, 300) 0

_________________________________________________________________

dense_2 (Dense) (None, 300) 90300

_________________________________________________________________

dropout_2 (Dropout) (None, 300) 0

_________________________________________________________________

dense_3 (Dense) (None, 64) 19264

_________________________________________________________________

dropout_3 (Dropout) (None, 64) 0

_________________________________________________________________

dense_4 (Dense) (None, 32) 2080

_________________________________________________________________

dropout_4 (Dropout) (None, 32) 0

_________________________________________________________________

dense_5 (Dense) (None, 32) 1056

_________________________________________________________________

dropout_5 (Dropout) (None, 32) 0

_________________________________________________________________

dense_6 (Dense) (None, 64) 2112

_________________________________________________________________

dropout_6 (Dropout) (None, 64) 0

_________________________________________________________________

dense_7 (Dense) (None, 300) 19500

=================================================================

Total params: 224,612

Trainable params: 224,612

Non-trainable params: 0

_________________________________________________________________

None
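The parameter counts in the summary above can be checked by hand: a Dense layer with bias has `n_in * n_out + n_out` parameters, and Dropout layers have none. The sketch below redoes that arithmetic in plain Python. The input width of 300 is an assumption inferred from dense_1's 90,300 parameters (300 * 300 + 300); the summary itself does not print it, and the helper names are illustrative only.

```python
# Layer widths read off the summary (dense_1 .. dense_7).
# INPUT_DIM is an assumption: it is inferred from dense_1's parameter count.
INPUT_DIM = 300
UNITS = [300, 300, 64, 32, 32, 64, 300]


def dense_params(n_in, n_out):
    """Weights (n_in * n_out) plus one bias per output unit."""
    return n_in * n_out + n_out


def count_params(input_dim, units):
    """Parameter count of each Dense layer in a simple stack."""
    counts = []
    n_in = input_dim
    for n_out in units:
        counts.append(dense_params(n_in, n_out))
        n_in = n_out  # this layer's output feeds the next layer
    return counts


per_layer = count_params(INPUT_DIM, UNITS)
total = sum(per_layer)
print(per_layer)  # [90300, 90300, 19264, 2080, 1056, 2112, 19500]
print(total)      # 224612
```

The per-layer values and the total agree with the `Param #` column and the `Total params` line.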

WARNING:tensorflow:From /Users/hiro/anaconda3/envs/py367/lib/python3.6/site-packages/tensorflow/python/ops/math_ops.py:3066: to_int32 (from tensorflow.python.ops.math_ops) is deprecated and will be removed in a future version.

Instructions for updating:

Use tf.cast instead.

Train on 900 samples, validate on 100 samples

Epoch 1/30

900/900 [==============================] - 1s 1ms/step - loss: 409.0245 - val_loss: 283.5180

Epoch 2/30

900/900 [==============================] - 0s 168us/step - loss: 245.5365 - val_loss: 171.2701

Epoch 3/30

900/900 [==============================] - 0s 167us/step - loss: 111.1675 - val_loss: 76.3028

Epoch 4/30

900/900 [==============================] - 0s 180us/step - loss: 65.6427 - val_loss: 59.7676

Epoch 5/30

900/900 [==============================] - 0s 165us/step - loss: 48.5084 - val_loss: 51.0341

Epoch 6/30

900/900 [==============================] - 0s 166us/step - loss: 38.3552 - val_loss: 45.0255

Epoch 7/30

900/900 [==============================] - 0s 164us/step - loss: 31.8401 - val_loss: 41.7299

Epoch 8/30

900/900 [==============================] - 0s 164us/step - loss: 27.0036 - val_loss: 38.4174

Epoch 9/30

900/900 [==============================] - 0s 161us/step - loss: 23.6374 - val_loss: 36.7532

Epoch 10/30

900/900 [==============================] - 0s 158us/step - loss: 21.0485 - val_loss: 34.0263

Epoch 11/30

900/900 [==============================] - 0s 161us/step - loss: 19.0564 - val_loss: 33.1850

Epoch 12/30

900/900 [==============================] - 0s 159us/step - loss: 17.1225 - val_loss: 33.4618

Epoch 13/30

900/900 [==============================] - 0s 159us/step - loss: 15.9848 - val_loss: 31.3626

Epoch 14/30

900/900 [==============================] - 0s 164us/step - loss: 14.8303 - val_loss: 31.7367

Epoch 15/30

900/900 [==============================] - 0s 164us/step - loss: 13.7908 - val_loss: 31.2365

Epoch 16/30

900/900 [==============================] - 0s 160us/step - loss: 12.9701 - val_loss: 31.1924

Epoch 17/30

900/900 [==============================] - 0s 159us/step - loss: 12.3456 - val_loss: 30.0029

Epoch 18/30

900/900 [==============================] - 0s 169us/step - loss: 11.6284 - val_loss: 29.8570

Epoch 19/30

900/900 [==============================] - 0s 163us/step - loss: 11.2143 - val_loss: 29.3987

Epoch 20/30

900/900 [==============================] - 0s 159us/step - loss: 10.6979 - val_loss: 29.3927

Epoch 21/30

900/900 [==============================] - 0s 179us/step - loss: 10.3287 - val_loss: 28.8785

Epoch 22/30

900/900 [==============================] - 0s 163us/step - loss: 9.9254 - val_loss: 28.8537

Epoch 23/30

900/900 [==============================] - 0s 163us/step - loss: 9.5745 - val_loss: 28.8735

Epoch 24/30

900/900 [==============================] - 0s 181us/step - loss: 9.2493 - val_loss: 28.1487

Epoch 25/30

900/900 [==============================] - 0s 164us/step - loss: 8.8706 - val_loss: 28.4695

Epoch 26/30

900/900 [==============================] - 0s 168us/step - loss: 8.5600 - val_loss: 28.3033

Epoch 27/30

900/900 [==============================] - 0s 163us/step - loss: 8.3266 - val_loss: 28.2146

Epoch 28/30

900/900 [==============================] - 0s 168us/step - loss: 8.0563 - val_loss: 27.9876

Epoch 29/30

900/900 [==============================] - 0s 157us/step - loss: 7.9045 - val_loss: 28.0378

Epoch 30/30

900/900 [==============================] - 0s 154us/step - loss: 7.6259 - val_loss: 27.9198
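In the log above, the training loss keeps falling while the validation loss levels off around 28. One way to track that gap is to parse the `loss` and `val_loss` values out of the progress lines. The following is a rough sketch that assumes the line format shown in this log; `parse_losses` is a hypothetical helper, and the sample contains only the last three epochs copied from the output.

```python
import re

# Matches the training loss followed by the validation loss on a progress line.
LINE_RE = re.compile(r"loss: (?P<loss>[\d.]+) - val_loss: (?P<val_loss>[\d.]+)")


def parse_losses(log_text):
    """Return a list of (loss, val_loss) pairs, one per epoch progress line."""
    pairs = []
    for line in log_text.splitlines():
        match = LINE_RE.search(line)
        if match:
            pairs.append((float(match.group("loss")), float(match.group("val_loss"))))
    return pairs


sample = """\
Epoch 28/30
900/900 [==============================] - 0s 168us/step - loss: 8.0563 - val_loss: 27.9876
Epoch 29/30
900/900 [==============================] - 0s 157us/step - loss: 7.9045 - val_loss: 28.0378
Epoch 30/30
900/900 [==============================] - 0s 154us/step - loss: 7.6259 - val_loss: 27.9198
"""

pairs = parse_losses(sample)
# Index (within the sample) of the line with the lowest validation loss.
best = min(range(len(pairs)), key=lambda i: pairs[i][1])
# Gap between validation and training loss on the final line.
gap = pairs[-1][1] - pairs[-1][0]
print(pairs[best], round(gap, 4))
```

On the full log, the same function would give one pair per epoch, which could then be plotted or compared epoch by epoch.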
